Week 40 - Quality of life

Normally the big updates are all progress talk. This week there’s some of that, and some other things too!

TL;DR

Week 40 - UI and visualisation

Nine months in

My “let’s try this out for three months” has now extended to three quarters of a year. I didn’t really expect to be here, nine months ago - I assumed that I’d either have something totally rocking out within a few months (unlikely, but you never know) or gone back to a proper job by now. Working with other people has some serious perks that I’m missing, not least of which is a salary, and I didn’t realise how much I’d miss the idle banter and shoulders to lean on. While I’m mostly capable of figuring out anything I need to, sometimes it takes a long time. I’ve got a few community channels that I dip into occasionally, but having friends around you all day was probably the thing I miss most about Boss Alien. That and telling people what to do.

Working for someone else, though - that’s just a pile of muck that I really don’t miss. I’ve had wonderful bosses, terrible bosses and everything in between, but the common thread was that people higher up than me regularly made decisions and choices that I disagreed with (for better or for worse). A decade ago, I became at peace with this - of course priorities vary as you rise up the food chain, and corp strat decisions often make a lot more sense if you know what’s happening elsewhere (and what’s coming down the pipe). As time continued, I lost my peace when I gained a good understanding of how these things actually work and realised the decisions were just stoopid a lot of the time. More often, the visibility of why the work was being done was terrible. I worked hard at BA to make everything transparent (so folks knew why as well as what) but it often felt like an uphill struggle.

And, while I like company, I also like quiet. Control of my environment. It may be freezing here in my garage, but I can turn up to work in my pants if I so desire, and there’s something to be said for that. Probably not too often during December. Once I get a sofa in here, and a bigger screen, it’ll rock for VR presentation.

My skillset and experience open a lot of doors (for now, at least!). I’ve been tapped up for plenty of opportunities since BA, some very interesting, most involving a commute to London (not going to happen before the kids are starving) and all of them working for someone else, growing or fixing or otherwise taking someone else’s baby and making it awesome. I’m good at what I do, but I still don’t want to do that. Soon, probably, but not yet!

So - onwards for the chilly Christmas months, and I’m going to try and turn the scanning feeds into something that other folks can use.

Quality of life

While I’m much happier as a person, I’m finding solo work often leaves me facing a hard problem to solve, which can bum me out. Sometimes it’s a grand idea to step back and see if there’s an easier way or an alternative chunk of work that will make the day better. So, this week instead of hitting on the hard task (camera calibration) I fixed up a load of UI and finally got around to taking a good hard look at the texture quality on the meshes. I also bit the bullet with the networking code and started again, again.

Networking

Being rate-capped at around 100K/s for various reasons was killing my debugging of remote issues, and just generally annoying me, so I threw out the UNET transport code and wrote my own socket stuff from scratch, using this excellent post as a starting point. Pretty much all I need for decent data transfer is the ability to shove a chunk of binary over the wire as fast as possible, and get it as fast as possible at the other end.

I’ve already done a decent job of serialisation (thanks to Flatbuffers) so I got the socket connection working, wrote an object and packet send and receive queue, and put some logic in to gracefully handle dropped packets (just throwing the object away, for now, although retry logic shouldn’t take more than a day or so).
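To make the "throw the object away on a dropped packet" idea concrete, here is a minimal Python sketch. It is not the project's actual code: the header layout, the 1200-byte chunk size and the names `split_object` and `Reassembler` are all assumptions made up for illustration.

```python
import struct

# Hypothetical packet header: object id, packet index, packet count.
HEADER = struct.Struct("!IHH")


def split_object(object_id, payload, max_chunk=1200):
    """Split one serialised object into header-prefixed packets."""
    chunks = [payload[i:i + max_chunk]
              for i in range(0, len(payload), max_chunk)] or [b""]
    return [HEADER.pack(object_id, index, len(chunks)) + chunk
            for index, chunk in enumerate(chunks)]


class Reassembler:
    """Rebuilds objects from packets; an incomplete object is simply dropped."""

    def __init__(self):
        self.current_id = None
        self.parts = {}

    def feed(self, packet):
        """Return the full payload once every packet has arrived, else None."""
        object_id, index, count = HEADER.unpack_from(packet)
        if object_id != self.current_id:
            # A new object has started, so anything half-received is thrown away.
            self.current_id = object_id
            self.parts = {}
        self.parts[index] = packet[HEADER.size:]
        if len(self.parts) < count:
            return None
        payload = b"".join(self.parts[i] for i in range(count))
        self.current_id, self.parts = None, {}
        return payload
```

Each packet from `split_object` would go out over the socket, and the receiver would call `feed` on whatever arrives. Retry logic would slot in at the point where the half-received object is discarded.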

It took a day to get working, and it’s about 15 times faster than the UNET version with super low latency. I wish I’d done this first! For some reason it seemed like it would be a much larger job. Getting it working over anything other than a local LAN will be an issue, but nothing insurmountable (and if I need to scale this properly I can go with something commercial instead). Definitely good enough for now, at least for 2-3 feeds.

UI and the user experience

Being very lazy, my UI on the feeds prototype has been, so far, a single menu with basic buttons on it. As I added in remote feeds, I just copied all the local feed buttons. Saving a mesh, turning on and off Chisel, the status UI - it was all becoming a bit too much. Plus, I wanted to be able to control the visualisation stuff without having to toggle things in the editor at runtime. I also wanted to be able to select which feed I was looking at. And be able to turn on and off pointcloud or texture visualisation. And a moon on a stick.

A day and a bit later, and I’ve added a few new menu screens, some dropdowns for feed selection, and some colour (to show the type of feed, currently).

Mesh texturing quality

The way I’m doing the mesh texturing has a few nasty flaws, which mostly evidence themselves (all other things being perfect) as bad joins along the side of every triangle, which tend to look worse at a distance. It’s bugged me for ages, but not enough to devote any time to. That time finally came on Thursday, when I drilled into exactly why it was looking so crappy.

What’s in the atlas

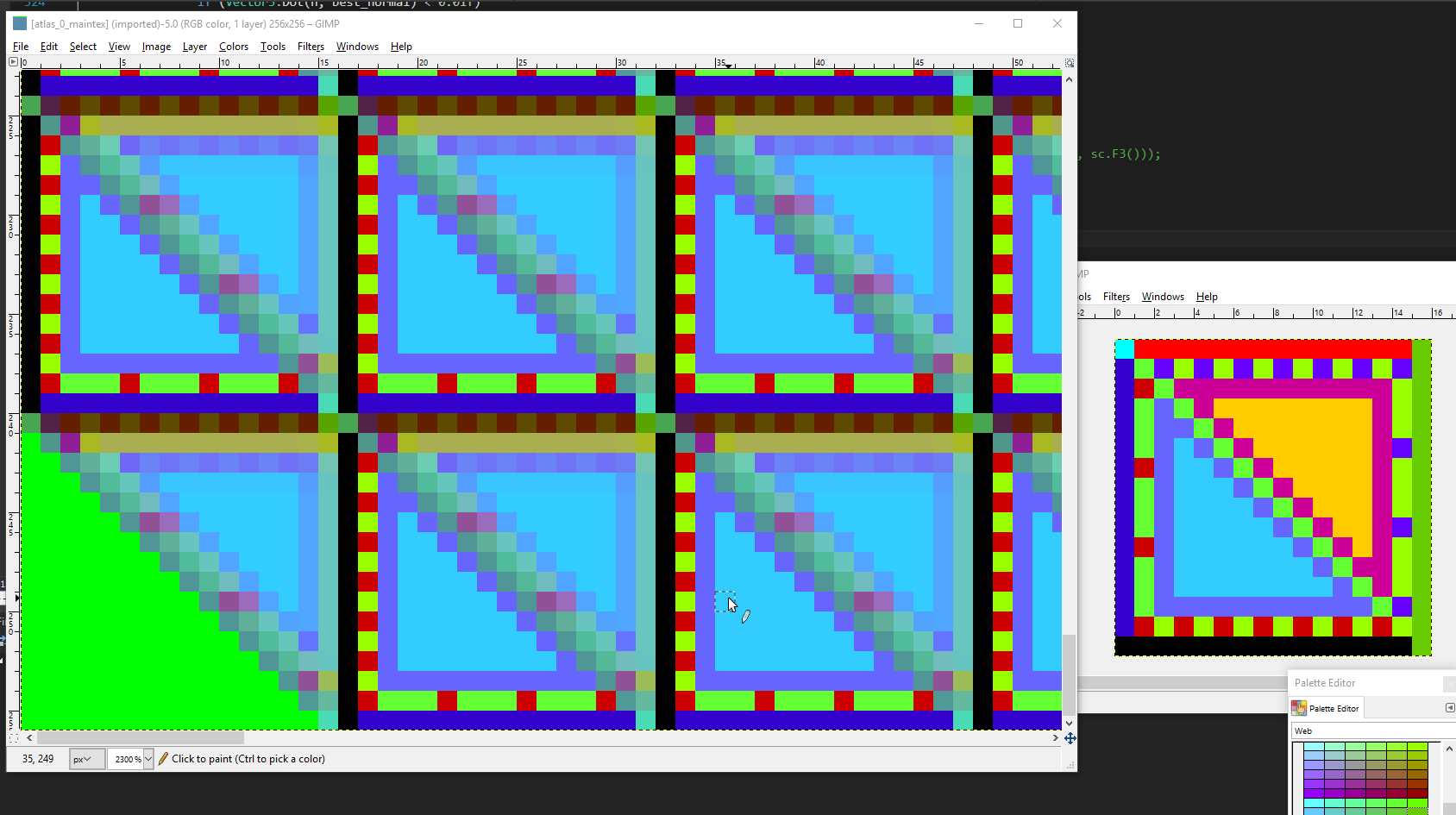

The source camera feed on the Kinect is 1920*1080 (and lovely quality to boot, no crazy camera autoexposure issues so far). I have to take some portion of this image, and blup it (technical term) onto the mesh I create from the depth feeds. This tends to be a triangle 4-10 pixels a side in the source image, going to an atlas triangle that is currently 16 pixels a side. The blit to atlas should be able to retain decent quality (I’m using bilinear filtering only for now) but when the atlas is drawn on the mesh, there’s all sorts of nasty happening along the edges of the triangles. Some of this I’ll simply never be able to fix (there will always be variance between the textures in the atlas which were never there in the source texture if it were directly applied to the triangle).
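As a rough illustration of that blit, the sketch below resamples a small source triangle into one half of a 16-pixel atlas cell using bilinear filtering. It is an assumption-laden toy, not the real blitting code: the image is a plain grid of floats, the names `bilinear` and `blit_triangle` are hypothetical, it ignores half-pixel offsets, and it fills the triangle up to and including the diagonal for simplicity.

```python
def bilinear(image, x, y):
    """Sample a 2D grid of floats at fractional (x, y), clamping at the border."""
    h, w = len(image), len(image[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def blit_triangle(image, src_tri, size=16):
    """Resample src_tri (three (x, y) points) into one triangle of a size x size cell.

    Vertex a lands on cell pixel (0, 0), b on (size - 1, 0) and c on (0, size - 1).
    Pixels outside the triangle are left as None.
    """
    a, b, c = src_tri
    cell = [[None] * size for _ in range(size)]
    for y in range(size):
        for x in range(size - y):  # x + y <= size - 1, diagonal included
            u, v = x / (size - 1), y / (size - 1)
            sx = a[0] + u * (b[0] - a[0]) + v * (c[0] - a[0])
            sy = a[1] + u * (b[1] - a[1]) + v * (c[1] - a[1])
            cell[y][x] = bilinear(image, sx, sy)
    return cell
```

Feeding it a smooth gradient is a handy sanity check: bilinear filtering reproduces a linear ramp exactly, so any blur or offset in the output points at the mapping maths rather than the filter.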

What I hadn’t expected, before I dug into it, was just how bad the code was that I’d originally written for the atlas blitting.

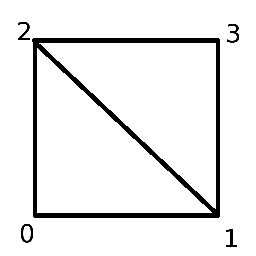

Each atlas texture is made up of lots of triangles. Assuming we just have two triangles in the atlas, they could (in theory) look like this:

The bottom left and top right triangles share a common edge (the green diagonal) which will always be in one or the other triangle, unless I exclude it from being rendered. Excluding it is the only safe option, assuming I want all triangles to be equal size. That leaves two areas that should be pretty safe to use - the blue triangle and the orange triangle. I thought that the contents of these two triangles in the atlas would look pretty decent, but where were the bad edges coming from?
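The split of a square atlas cell into two equal triangles plus an excluded diagonal can be sketched like this. The coordinate convention (x from the left, y from the bottom, diagonal running top-left to bottom-right) and the optional `margin` guard band are assumptions for illustration, as are the function names.

```python
def classify(x, y, size=16, margin=0):
    """Say which part of a size x size atlas cell pixel (x, y) belongs to.

    Pixels within `margin` steps of the shared diagonal are excluded, so that
    both triangles end up the same size.
    """
    s = x + y
    if abs(s - (size - 1)) <= margin:
        return "diagonal"
    return "lower_left" if s < size - 1 else "upper_right"


def count_pixels(size=16, margin=0):
    """Count how many pixels of the cell fall into each region."""
    counts = {"lower_left": 0, "diagonal": 0, "upper_right": 0}
    for y in range(size):
        for x in range(size):
            counts[classify(x, y, size, margin)] += 1
    return counts
```

For a 16-pixel cell with no margin this gives 120 usable pixels per triangle and 16 on the diagonal. A one-pixel margin either side of the diagonal drops that to 105 per triangle.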

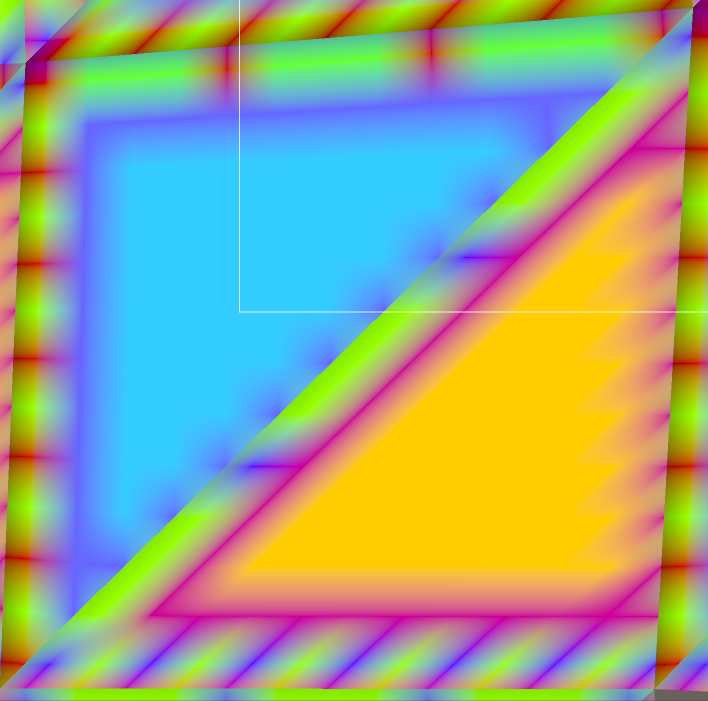

So, I ran a few tests - the first one being “take the blue triangle as the source image (as though it were coming from the camera texture) and stick it in the atlas to see what happens”.

This is the result:

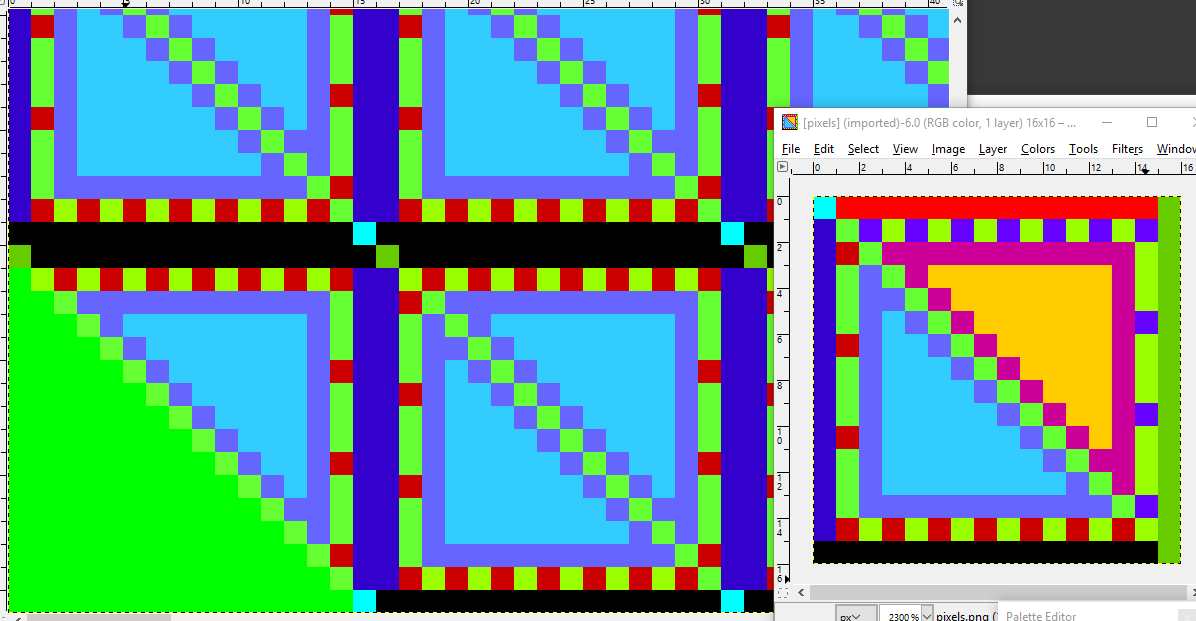

Some really horrible blurring. Yes, bad math. That took an hour or so to fix, resulting in:

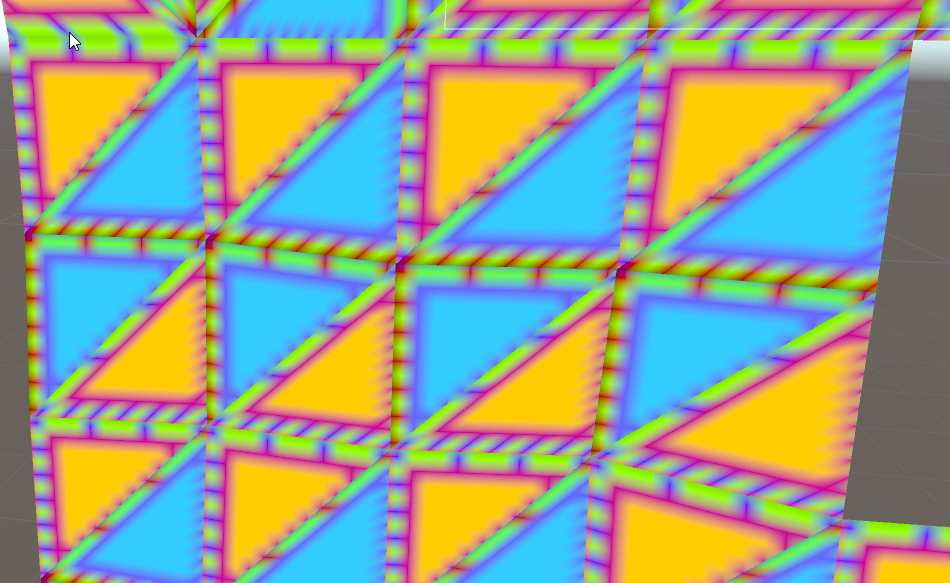

Switching this to use both triangles gave me the atlas result I was looking for:

This highlights which portions of the texture are actually safe to render.

But, on the resulting mesh, the mismatches along the edges are obvious:

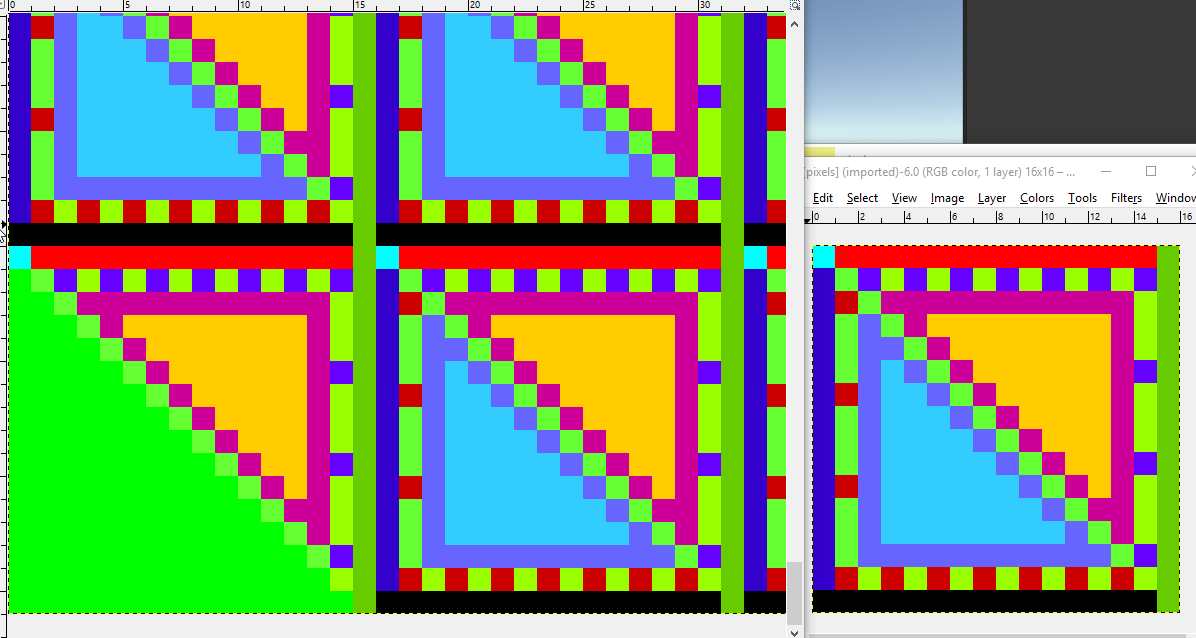

Less obvious, but actually a big part of the “rubbish at distance” is the bleeding that’s happening at the edges of the polygons. In the next image, look at the blue and black fringes (to the top and left of the blue triangle’s green-red border, respectively) and the red fringe at the bottom of the orange triangle’s green-blue border. Less obvious is the green fringe on the blue side of the centre diagonal, but it’s there.

The area I was considering safe just ain’t that safe. I needed to shrink it in another pixel. I also need to figure out a good way to order the source triangle’s incoming verts such that the widest angle on the source goes into the right angle in the atlas. That would help stop the effect visible on the orange triangle, where it’s rendered as a diagonal stretch instead of a horizontal / vertical stretch.
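One possible way to do that reordering, sketched in Python under the assumption that the source triangle is a sequence of three 2D points: find the vertex with the widest interior angle and rotate the vertex list so it comes first. Rotating (rather than swapping) keeps the winding order intact. The function names are hypothetical.

```python
import math


def angle_at(a, b, c):
    """Interior angle at vertex a of triangle a, b, c, in radians."""
    abx, aby = b[0] - a[0], b[1] - a[1]
    acx, acy = c[0] - a[0], c[1] - a[1]
    dot = abx * acx + aby * acy
    cross = abx * acy - aby * acx
    return math.atan2(abs(cross), dot)


def widest_angle_first(tri):
    """Rotate the vertex order so the widest-angle vertex is first.

    The cyclic order is unchanged, so the triangle keeps its winding.
    """
    angles = [angle_at(tri[i], tri[(i + 1) % 3], tri[(i + 2) % 3])
              for i in range(3)]
    start = angles.index(max(angles))
    return tri[start:] + tri[:start]
```

With the widest-angle vertex always first, the atlas side can map vertex 0 to the right-angle corner of its triangle without having to inspect the shape again.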

Thinking about it more as I am writing this, I’ve come to the realisation that the vertex order in the marching cubes implementation is also at fault here - I’ve not bothered to look at the vertex order and placement of the triangles previously, as it was “working” - but quads coming out from my meshing are not how I’d like them.

Ideally, with a quad like this:

I’d make up two triangles from vertices 0,1,2 and 1,2,3. The winding order should be clockwise (so that the face shows up) but I also need to match it up to the UV ordering in the atlas. This means I need SharpChisel to generate the first and second triangle such that they’re 180-degree rotations of each other (if the first triangle goes 0,2,1 then the second triangle should go 3,1,2, not 1,2,3 or 2,3,1).
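A small Python sketch of that rule, assuming a quad laid out with vertex 0 bottom-left, 1 bottom-right, 2 top-left and 3 top-right (the layout is a guess, chosen so that 1 and 2 sit on the shared diagonal). Under that assumption a 180-degree rotation about the quad centre swaps 0 with 3 and 1 with 2. The names here are hypothetical and this is not SharpChisel code.

```python
# Assumed quad layout (y up): 0 bottom-left, 1 bottom-right, 2 top-left, 3 top-right.
QUAD = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (0.0, 1.0), 3: (1.0, 1.0)}


def rotate_180(tri):
    """Rotate a triangle's vertex indices 180 degrees about the quad centre."""
    return tuple(3 - i for i in tri)


def signed_area(tri):
    """Signed area of an index triangle; negative means clockwise with y up."""
    (ax, ay), (bx, by), (cx, cy) = (QUAD[i] for i in tri)
    return 0.5 * ((bx - ax) * (cy - ay) - (by - ay) * (cx - ax))


def split_quad(first=(0, 2, 1)):
    """Return both triangles of the quad, the second a rotation of the first."""
    return first, rotate_180(first)
```

`split_quad()` yields `(0, 2, 1)` and `(3, 1, 2)`. Both have the same winding, and each starts on its own right-angle corner, which is what lets the same UV ordering be reused for both halves of the atlas cell. `(1, 2, 3)` has the same winding too, but starts on the diagonal, so its UVs would not line up.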

There’s 30 quads in the table of 256 possible cube configurations, which is a few too many to try and hack on at gone midnight, so I’ll probably look at fixing that up next week - but that should get me a lot closer to perfection along the edges.

Still, it’s already looking a lot nicer. Here’s a Kinect scan at 2cm.

Next week …

Back to camera alignment!